How CPAs can combat the rising threat of deepfake fraud

Faked, live video and audio of executives can fool employees and cost companies millions, but accountants can take steps to mitigate the risk.

Imagine you receive an email invitation from your boss to attend a video call. The email is from the headquarters office regarding a “confidential transaction.” You accept the invitation and join the call, in which the company CFO tells you to transfer 200 million Hong Kong dollars ($25.6 million) across 15 transactions, using five Hong Kong banks. And you do it because the people in the video call look and sound exactly like you remember them.

Only later do you realize you fell victim to a deepfake — the people you recognized in the video call were digital clones of your colleagues that fraudsters created with the help of artificial intelligence (AI). And the destination of the money transfer was the fraudsters’ pockets.

This isn’t a hypothetical example. Deepfakes are a potent, fast-spreading, high-tech form of financial scam, and this one happened to a finance professional working for British multinational design and engineering firm Arup in Hong Kong. The company reported the incident to Hong Kong police in January 2024.

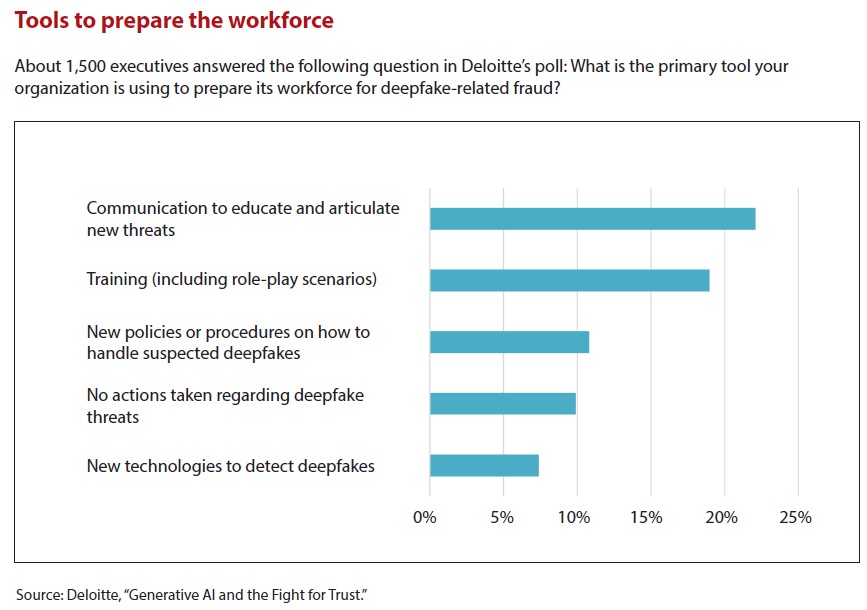

Shortly after news outlets across the world made the incident public, Deloitte polled more than 1,000 executives on their experiences with deepfake attacks. Nearly 26% said their organization had experienced one or two in the previous 12 months. And more than half of the respondents (51.6%) expected the number and size of deepfake fraud attacks targeting their organizations to increase over the subsequent 12 months.

“We hear about more and more of these on a regular, everyday basis,” said Jonathan T. Marks, CPA/CFF/CITP, CGMA, a partner in BDO’s Forensic Accounting & Regulatory Compliance practice. Finance and banking, he said, are key targets because of their access to money and data.

In November 2024, the increasing number of reported deepfake fraud attacks prompted the U.S. Treasury Department’s Financial Crimes Enforcement Network (FinCEN) to alert financial institutions and help them identify the fraud schemes. (See the sidebar, “The Year Deepfakes Became Commonplace.”)

Other authorities and experts are raising the alarm, too.

“If people are not paying attention to this, it might be too late when an incident happens — especially with the speed at which information can get moved, funds can get transferred,” said Satish Lalchand, a principal in Deloitte Transactions and Business Analytics LLP who specializes in fraud detection and AI strategy.

But finance professionals can take action to mitigate the deepfake-fraud risk. The Deloitte poll, for example, highlighted what respondents said they have done. (See the graphic, “Tools to Prepare the Workforce.”)

FORMS DEEPFAKES CAN TAKE

Can you spot a deepfake?

It’s sometimes possible to identify a deepfake by observing it closely. AI-generated images may have incongruities, such as a person with too many fingers or a nonsensical layout for a building. Synthetic video or audio of a person may simply feel off in a way that’s hard to pinpoint.

“It’s lighting, it’s cadence, it’s these certain things” that can betray an imposter, Marks said.

Deepfakes are digital imitations of images, videos, documents, and voices fraudsters create with generative AI. They gained widespread public attention through head-spinning viral videos — like an eerily accurate digital clone of Tom Cruise, complete with convincing facial expressions and body language.

And deepfakes are advancing just as fast as AI. The technology is becoming more widespread and easier to deploy. As it improves, the tells that give away a deepfake become more subtle, and scammers can mask remaining flaws by transmitting the video in lower resolution or by inserting digital noise and compression artifacts (distortions that can appear when video is compressed).

The increasing power and accessibility of deepfake technology also means that attackers can pursue a wider range of victims.

Previously, a convincing fake might have been feasible only if the subject appeared in a large number of audio and video recordings, but experts say fakes now can be generated with far less source material. That could make it easier to target executives at smaller companies, even if little video or audio of them is publicly available.

Deloitte’s Center for Financial Services estimated that AI-generated fraud will reach $40 billion in damages in the United States by 2027, up from $12.3 billion in 2023, a compound annual growth rate of 32%.
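As a rough check on how those figures relate, the short sketch below computes the annual growth rate implied by moving from $12.3 billion in 2023 to $40 billion in 2027, and what a 32% rate would project over the same period. It assumes simple annual compounding over four years, which is an assumption made here for illustration and not necessarily Deloitte’s methodology. The function names are hypothetical.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to go from start to end."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value after compounding start at the given annual rate."""
    return start * (1 + rate) ** years


# Figures quoted in the article, in billions of U.S. dollars.
LOSSES_2023 = 12.3
LOSSES_2027 = 40.0
YEARS = 2027 - 2023  # assumption: four full compounding periods

rate = implied_annual_growth(LOSSES_2023, LOSSES_2027, YEARS)
projected = project(LOSSES_2023, 0.32, YEARS)

print(f"Implied annual growth, 2023 to 2027: {rate:.1%}")
print(f"2027 losses at 32% annual growth: ${projected:.1f} billion")
```

Under these assumptions the implied rate comes out at roughly a third per year, close to the cited figure once rounding of the $40 billion estimate is allowed for.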

“Gen AI tools have greatly reduced the resources required to produce high-quality synthetic content,” FinCEN reported in its November 2024 alert.

For example, in March 2024, OpenAI, the company that developed ChatGPT, reported that its voice engine technology could generate “natural-sounding speech that closely resembles the original speaker” with just a 15-second sample.

OpenAI acknowledged the potential abuse of the technology while also highlighting legal and technological guardrails it had put in place. But generative AI advances aren’t just coming from tech giants anymore. As the open-source model DeepSeek has shown, small outfits can create advanced generative AI models.

In other words, you won’t catch every deepfake simply by looking for glitches. But other techniques may still help.

Clay Kniepmann, CPA/CFF/ABV, a forensic, valuation, and litigation principal at Anders CPAs + Advisors, advised keeping an eye out for odd behavior, such as a potential impersonator’s lack of knowledge about the real person.

“It’s knowing who you work with,” Kniepmann said. “Know who that approver is, what their personality is like.”

More broadly, the most reliable defense may be to stay aware of the more universal signs of fraud — unusual and unexpected requests, false impressions of urgency, and stories that don’t stand up to scrutiny.

STEPS FINANCE CAN TAKE TO STOP DEEPFAKE FRAUD

One of the voices sharing insights on deepfakes is Rob Greig, Arup’s global chief information officer, who spoke to the World Economic Forum (WEF) about lessons learned. Following the deepfake attack, Arup assessed in depth whether the incident had put systems, clients, or data at risk, Greig said in a video interview. The company determined none were compromised in the attack.

“People were persuaded, if you like, deceived into believing they were carrying out genuine transactions that resulted in money leaving the organization,” he said in the WEF interview.

Deception is nothing new; the technology to create deepfakes is, Greig said. “Deepfakes sounds very glamorous, but what does that actually really mean?” he added. “It means someone successfully pretended to be somebody else, and they used technology to enable them to do that.”

Instead of counting on employees to spot fakes, organizations should consider investments in training and technology to deflect attacks and limit their damage. Kniepmann, Marks, and Lalchand suggested the following steps:

Enhance anti-fraud training and culture

Companies already should be training employees to identify fraud attempts through more common methods, such as email scams.

Deepfakes can be added to this curriculum — making personnel aware of the possibility that a phone call or other contact could be fake. Companies also could start to test how employees respond to mock fraud attempts involving deepfakes, Kniepmann suggested — similar to how many organizations send mock phishing emails.

“It’s training on the possibility of those things,” Kniepmann said. “Conduct test attacks, and see who’s passing, who’s failing, who’s falling for this.”

In general, employees should be reminded to trust their instincts, maintain a skeptical stance, and seek backup in any suspicious situation, Marks said. “When you lower your level of skepticism, the white-collar criminal will take advantage of you.” Creating an anti-fraud culture, he said, is a never-ending endeavor.

“Skepticism is not something that you set and forget,” Marks added. “It’s constantly changing and acclimating based on the circumstances. You just can’t get complacent.”

Assess policies for large monetary and data transfers

Companies should examine their internal controls over who can authorize major financial and data transactions and how that happens.

“I am all for any reason to revisit your internal controls,” Kniepmann said.

Controls should detail who has the authority to initiate and approve transactions. Companies also may consider setting policies about communications: Perhaps requests for money and data transfers should come only through more secure channels.

Marks encouraged the use of platforms with two-factor authentication, making it harder for AI fakes to worm their way into a conversation.
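To make the idea of transfer controls concrete, here is a minimal sketch of how such a policy might be expressed as an automated check. Everything in it is hypothetical: the dollar threshold, the channel names, and the rule that large transfers need two approvers other than the initiator are illustrative assumptions, not recommendations from the experts quoted here.

```python
from dataclasses import dataclass, field

# Hypothetical policy values; each organization would set its own.
APPROVAL_THRESHOLD = 10_000  # transfers at or above this need two approvers
TRUSTED_CHANNELS = {"erp_workflow", "treasury_portal"}  # illustrative names


@dataclass
class TransferRequest:
    amount: float
    channel: str  # where the request arrived, e.g. "video_call"
    initiator: str
    approvers: set = field(default_factory=set)


def policy_violations(req: TransferRequest) -> list[str]:
    """Return the reasons a request should be held; empty if it passes."""
    problems = []
    if req.channel not in TRUSTED_CHANNELS:
        problems.append("request did not arrive through an approved channel")
    # The initiator cannot count as an approver of their own request.
    independent = req.approvers - {req.initiator}
    if req.amount >= APPROVAL_THRESHOLD and len(independent) < 2:
        problems.append("large transfer lacks two independent approvers")
    return problems


# A request like the one in the opening scenario: large, urgent, made on a call.
request = TransferRequest(amount=250_000, channel="video_call", initiator="analyst")
for problem in policy_violations(request):
    print("HOLD:", problem)
```

The point of the sketch is that the check never asks whether the person on the call looked or sounded genuine. A request is held because of how it arrived and who signed off, which is the kind of control a convincing fake cannot talk its way around.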

Encourage the use of human connection and ‘tradecraft’

One of the best defenses against AI may be human connection. If employees know each other well, they’re more likely to spot a deepfake.

“Fraudsters often exploit publicly available information [to steal an identity], but they can’t replicate the nuance of personal shared experience,” Marks said.

Employees can even be trained to specifically check and challenge the authenticity of their conversational partners — a practice Marks refers to as tradecraft. Tradecraft can include checking knowledge of business and personal topics.

Companies also can use secret codewords to ensure an impostor hasn’t entered the conversation.

“You’re adding a little friction, but you’re layering on security,” Lalchand said.

Embrace AI-detection technology

Tech companies are racing to deploy technologies that can detect AI fakery. Some tools integrate directly with video platforms like Zoom, while others can check that a connection is secure and appears to trace back to the appropriate geographical location, Lalchand said.

Businesses also are fighting identity fraud more broadly with new identity verification platforms that tap into multiple sources of data so they can’t be defeated by a single fake document.

“The real key here is to stay proactive, investing in detection tools, in validating authenticity,” Marks said.

A growing number of vendors offer tools to analyze prerecorded video files, among them DuckDuckGoose AI, Deepware Scanner, and Google SynthID. Another tool, Reality Defender, can even spot fakes in real-time videoconferences.

Assess your vulnerabilities

While there are many strategies for fighting deepfakes, they should all begin with an assessment of a company’s fraud risk profile, the experts agreed.

“White-collar criminals are profiling you, and you should be profiling them,” Marks said. “For an organization to really get started, you have to do a risk assessment.”

In the face of a constantly changing threat, every organization should be reinforcing its defenses against fraud of all sorts.

“You’re only as strong,” Lalchand warned, “as the weakest link in your organization.”

The year deepfakes became commonplace

The number of AI-generated deepfakes increased fourfold from 2023 to 2024, accounting for 7% of all fraud in 2024, according to an analysis by Sumsub, a UK company that makes identity verification technology.

The country with the largest increase in deepfake attacks was South Korea, but the Sumsub analysis also found that attacks spiked significantly in the Middle East, Africa, Latin America, and the Caribbean.

Deepfake examples reported worldwide in 2024 included:

- A video of her boss that tricked a finance employee in China’s Shaanxi province into transferring 1.86 million yuan ($258,000) into an account fraudsters had established. According to the China Daily, a phone call to her boss to verify the transaction revealed the fraud and police were able to arrange an emergency freeze of much of the money transfer.

- Images that suggested Hurricane Milton flooded Walt Disney World. The images were shared by a Russian news agency and circulated widely on social media.

- Fake images of earthquake survivors in Turkey and Syria that scammers circulated on social media to channel donations into their PayPal accounts and cryptoasset wallets, the BBC reported. The magnitude 7.8 earthquake in February 2023 killed more than 50,000 people, damaged at least 230,000 buildings, and left millions without heat or water.

About the author

Andrew Kenney is a freelance writer based in Colorado. To comment on this article or to suggest an idea for another article, contact Jeff Drew at Jeff.Drew@aicpa-cima.com.
